These days, creating your own AI bot seems like an evening project. LLMs, ready-made frameworks, and no-code tools promise: “Plug it in and you’re done.” Bots for customer support, content generation, or education all look equally easy to build. But there’s a catch.

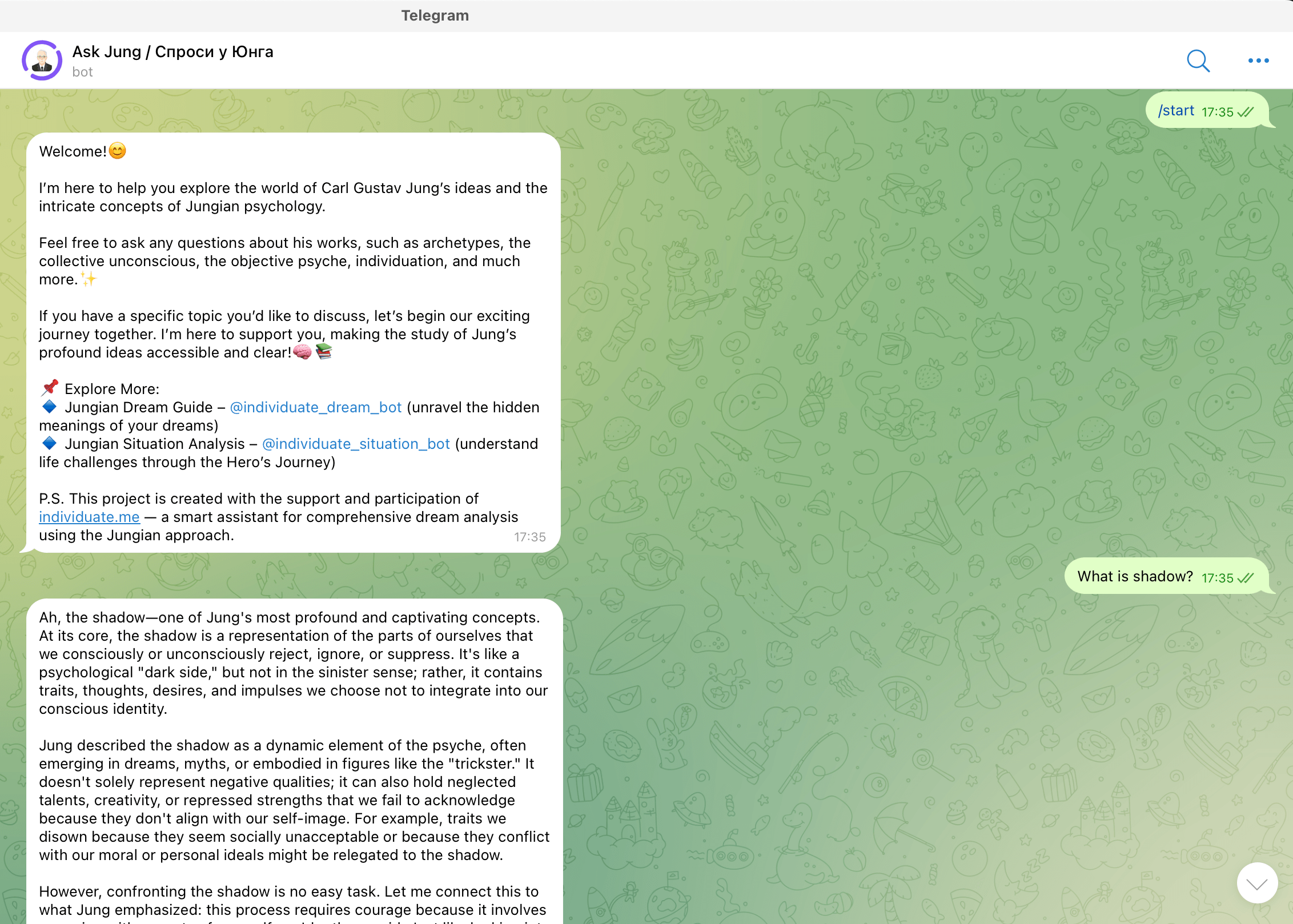

Building a bot is easy. Building a useful one is not. This case is about how we created a small but smart chatbot focused on Jungian psychology — and why even a pet project can suddenly turn out to have production-level complexity.

Why create a bot based on Jung?

First, we wanted to master n8n, a no-code automation tool — not on an abstract task, but within a meaningful, real-life project. We already had solid expertise in machine learning and a long-standing interest in language models. We’ve been closely following the development of tools like LangChain, RAG architectures, and open-source solutions. For us, it wasn’t just about “playing around” — we wanted to build a fully functioning pipeline from data to output, where the LLM is just one part of the system.

Second, we already had context. One of our co-founders, Evgeny Smirnov, hosts a podcast called There Is No Spoon, dedicated to psychology, and runs a Telegram community where Jungian topics are regularly discussed.

Third, Jungian psychology is the perfect challenge for AI. It’s a field where the texts are packed with references to mythology, philosophy, and religion, and require a high level of cultural literacy. Even for a thoughtful human reader, it’s not always easy to grasp what Jung meant. Translating these ideas into clear, everyday language is a task not everyone can handle — let alone a bot.

Jung’s work sits at the intersection of psychology, philosophy, theology, and mythology — making it both fascinating and incredibly challenging for AI to interpret correctly. The bot had to be more than just technically functional; it needed deep contextual understanding of some of the most complex ideas in psychological theory.

Evgeny Smirnov, Co-founder

And finally — as is often the case in ML projects — the hardest part isn’t building a prototype, but turning it into a working product. The gap between a demo and a production-ready solution is one of the most common reasons for failure. This bot became our way to test how quickly, reliably, and effectively we could bridge that gap on our own.

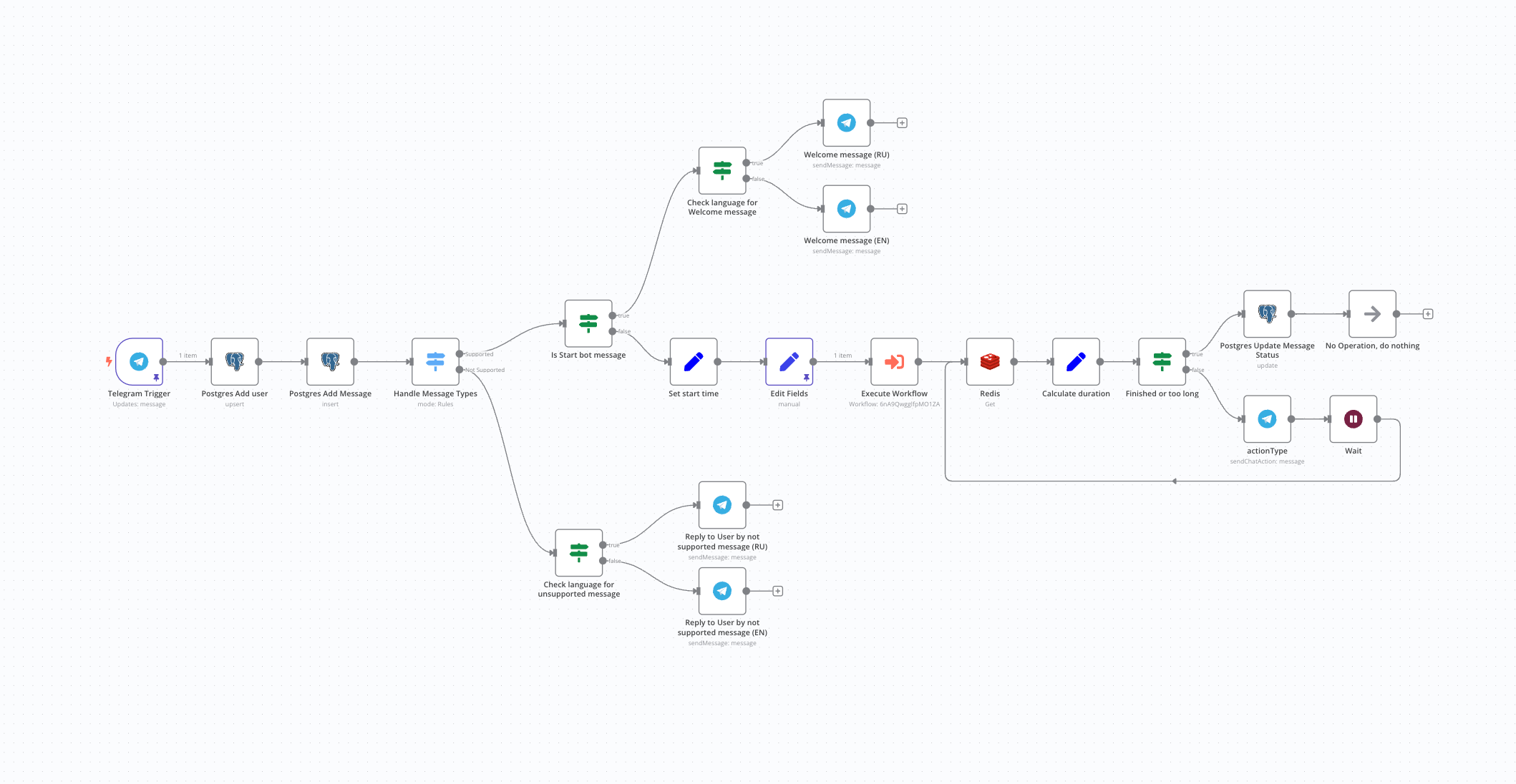

Architecture: Elegant on paper, tricky in practice.

To build the bot, we chose the RAG (retrieval-augmented generation) architecture — an approach where the language model (LLM) doesn’t answer “off the top of its head,” but instead receives relevant fragments from a knowledge base as input.
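
To make the idea concrete, here is a minimal, illustrative sketch of how retrieved fragments can be placed into a prompt. It is not the bot's actual implementation: the `Fragment` type, the `build_prompt` function, and the prompt wording are all assumptions made for this example, and the passages are placeholders rather than real excerpts.

```python
from dataclasses import dataclass


@dataclass
class Fragment:
    """One retrieved passage (hypothetical structure for this sketch)."""
    source: str  # label of the work the passage comes from
    text: str    # the passage itself


def build_prompt(question: str, fragments: list[Fragment]) -> str:
    """Assemble a prompt that asks the model to answer from the fragments only."""
    if not fragments:
        raise ValueError("RAG needs at least one retrieved fragment")
    # Number the fragments so the model can cite them in its answer.
    context = "\n\n".join(
        f"[{i}] ({f.source}) {f.text}" for i, f in enumerate(fragments, start=1)
    )
    return (
        "Answer the question using only the numbered fragments below.\n"
        "Cite fragment numbers. If the fragments do not contain the answer, "
        "say that you do not know.\n\n"
        f"Fragments:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    demo = [Fragment("source-a", "Placeholder passage one.")]
    print(build_prompt("What does the passage say?", demo))
```

The model never sees the whole knowledge base, only the few numbered passages that the retrieval step selected for this particular question.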

The tech stack was fairly accessible:

- n8n: for no-code automation and integration with Telegram

- LangChain + LangGraph + markdownit + many other tools: for answer generation

- Smart output parser: for producing substantive answers, having the model assess them itself (to reduce “hallucinations”), and analyzing sources in depth

- Knowledge base: curated excerpts from Jung’s writings
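
As an illustration of what an output parser with self-assessment can look like, the sketch below validates a model reply that is expected to be JSON with an answer, a confidence score, and a list of sources. The field names (`answer`, `confidence`, `sources`) and the `parse_model_output` function are assumptions for this example, not the format our bot uses.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class ParsedAnswer:
    answer: str
    confidence: float   # the model's own estimate, between 0.0 and 1.0
    sources: list[str]  # identifiers of the fragments the answer relies on


def parse_model_output(raw: str) -> Optional[ParsedAnswer]:
    """Return a validated answer, or None if the reply is malformed."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None

    answer = data.get("answer")
    confidence = data.get("confidence")
    sources = data.get("sources")

    if not isinstance(answer, str) or not answer.strip():
        return None
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
        return None
    if not 0.0 <= confidence <= 1.0:
        return None
    if not isinstance(sources, list) or not all(isinstance(s, str) for s in sources):
        return None
    return ParsedAnswer(answer.strip(), float(confidence), sources)
```

Treating a malformed reply as "no answer" lets the rest of the pipeline retry or fall back instead of showing the user unverified text.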

The bot didn’t have a custom interface — it was simply a Telegram chat. But it could easily be integrated into Discord, embedded on a website, and so on.

Where the real work begins

The real challenges started at the stage where everything seemed clear.

Copyright issues. Jung’s Collected Works, our main source, is still under copyright. That meant we couldn’t use those texts in the bot.

Finding copyright-free texts. We discovered several of Jung’s early works in German through Project Gutenberg. These were in the public domain, but they were in German.

Translation and processing. We decided to translate the texts, not manually but with a language model. Yes, it’s a compromise. But for our goals, it was good enough. We also created “rich data” that contains not only the texts but also other substantive information, including concepts, relations between them, and other important parameters.
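
One way to picture such “rich data” is a record that stores each translated passage together with the concepts it mentions and the relations between them. The sketch below is only an assumed shape: the `RichFragment` and `Relation` types, their field names, and the helper function are hypothetical, and the values used with them would be placeholders.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Relation:
    """A link between two concepts, e.g. (subject, predicate, obj)."""
    subject: str
    predicate: str
    obj: str


@dataclass
class RichFragment:
    fragment_id: str
    text: str          # the translated passage
    source_title: str  # the work the passage was taken from
    concepts: list[str] = field(default_factory=list)
    relations: list[Relation] = field(default_factory=list)


def fragments_mentioning(fragments: list[RichFragment], concept: str) -> list[RichFragment]:
    """Return the fragments tagged with the given concept (case-insensitive)."""
    wanted = concept.casefold()
    return [f for f in fragments if any(c.casefold() == wanted for c in f.concepts)]
```

Metadata like this gives the retrieval step something more precise to filter on than raw text alone.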

Finding relevant fragments. A language model can’t process an entire book at once. There’s a limit to how many tokens it can handle. So we had to learn how to extract just the right passages to include in a query. That’s a discipline of its own: how to find exactly what matters in a large body of text. Ranking, relevance, evaluation, and self-evaluations are essential at this step.
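
The sketch below illustrates the ranking-under-a-budget idea in its simplest form. It is not our retrieval pipeline: it swaps in plain word-overlap cosine similarity where a production system would typically use embeddings, counts words as a crude stand-in for model tokens, and all function names and parameters are assumptions for this example.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a rough stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def select_fragments(
    question: str, fragments: list[str], max_tokens: int, min_score: float = 0.0
) -> list[tuple[float, str]]:
    """Pick the most relevant fragments that fit into the token budget."""
    q = Counter(tokenize(question))
    scored = sorted(
        ((cosine(q, Counter(tokenize(f))), f) for f in fragments),
        key=lambda pair: pair[0],
        reverse=True,
    )
    chosen: list[tuple[float, str]] = []
    used = 0
    for score, frag in scored:
        cost = len(tokenize(frag))
        # Skip irrelevant fragments and those that would overflow the budget.
        if score <= min_score or used + cost > max_tokens:
            continue
        chosen.append((score, frag))
        used += cost
    return chosen
```

Keeping the scores alongside the selected fragments makes it possible to evaluate retrieval quality later and to refuse to answer when nothing scores well.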

The “reasonable nonsense” problem. A model can sound intelligent while producing total nonsense. At one point, our system started generating answers that looked like real Jungian insights — but in fact were just coherent-sounding gibberish. Only someone deeply familiar with Jungian psychology could tell the difference. After experimenting with different models and carefully tuning the workflows and prompts, the output quality improved significantly.

It isn’t about the LLM — it’s about the expertise.

If you don’t have someone who understands what’s written, you won’t know whether the bot is actually helpful or just “writing in the same style.”

Moreover, to prevent the bot from “preaching” or generating nonsense, we:

- Limited its capabilities — it only responds based on the provided texts

- Forbade it from “thinking for itself”

- Set up a fallback: if there’s no answer, the bot honestly says so

This goes against the classic GPT logic, where everything should be “confident and polished.” But in a real product, an honest “I don’t know” is better than made-up answers without any source.
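
A minimal sketch of such a fallback, under stated assumptions: fragments arrive with relevance scores, `generate` is a hypothetical callable that wraps the language model, and the `min_score` threshold and fallback wording are invented for this example rather than taken from our workflow.

```python
from typing import Callable, Sequence

FALLBACK = "I don't know: the source texts I have do not cover this."


def answer_or_fallback(
    question: str,
    scored_fragments: Sequence[tuple[float, str]],
    generate: Callable[[str, list[str]], str],
    min_score: float = 0.3,
) -> str:
    """Answer only when there is supporting text; otherwise admit ignorance."""
    supported = [text for score, text in scored_fragments if score >= min_score]
    if not supported:
        # No evidence: do not call the model at all.
        return FALLBACK
    reply = generate(question, supported).strip()
    # An empty reply is treated the same as having no answer.
    return reply or FALLBACK
```

The key design choice is that the decision to stay silent is made in code, before the model is called, rather than left to the model's own judgment.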

As a result, the bot can respond to questions at different levels of difficulty and adjust its answers according to the user’s level of understanding. A user can ask the bot to explain something in simple terms or, conversely, to dive deeper into the terminology. Those who study Jung’s works deeply might ask: “What, according to Jung, complements the Ego in the external world to achieve wholeness?” or “Does the transcendent function arise when confronted with the belief in a just world?” Meanwhile, those interested in the most popular concepts ask questions like “When does the integration of the Shadow occur?”

Telegram and the details no one thinks about

All of this was implemented in Telegram. Integration took just a couple of minutes — n8n supports it out of the box. But even here, there were some nuances.

For example, the Telegram “typing…” animation lasts only a few seconds (the Bot API documents the status as lasting 5 seconds or less). Our bot sometimes took longer to “think.” We had to use some tricks so users would see that the bot was still “thinking” and hadn’t crashed.
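
One common trick, sketched here in plain Python rather than in n8n, is to resend the typing status on a timer until the answer is ready. This is an illustration, not our workflow: `send_typing` is a hypothetical async callable that would wrap the Bot API's `sendChatAction` method with the `typing` action, and the 4-second default interval is an assumption.

```python
import asyncio
import contextlib
from typing import Awaitable, Callable, TypeVar

T = TypeVar("T")


async def with_typing(
    send_typing: Callable[[], Awaitable[None]],
    work: Awaitable[T],
    interval: float = 4.0,
) -> T:
    """Await `work` while refreshing the typing indicator every `interval` seconds."""

    async def keep_alive() -> None:
        while True:
            await send_typing()
            await asyncio.sleep(interval)

    pinger = asyncio.create_task(keep_alive())
    try:
        return await work
    finally:
        pinger.cancel()
        # The indicator is cosmetic, so its failures must not break the answer.
        with contextlib.suppress(asyncio.CancelledError, Exception):
            await pinger


async def _demo() -> None:
    async def fake_send_typing() -> None:
        print("typing...")

    async def slow_answer() -> str:
        await asyncio.sleep(0.25)
        return "answer ready"

    print(await with_typing(fake_send_typing, slow_answer(), interval=0.1))


if __name__ == "__main__":
    asyncio.run(_demo())
```

The indicator stops as soon as the answer is returned, so the user never sees “typing…” hanging after the reply has arrived.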

What we achieved

The “Ask Jung” project became:

- A Telegram chatbot with real users, gaining popularity in psychology communities

- A tool for exploring complex humanities texts

- A demonstration of how knowledge and technology can work together

Users receive answers to questions of varying complexity, and we’ve greatly improved our skills in creating smart and useful chatbots using LLMs and no-code tools like n8n.

Six key points to keep in mind when creating an AI chatbot

- It’s not just about the model. A good chatbot needs good content. You have to really know the topic and pick your sources carefully. Extremely carefully.

- Expertise matters. You cannot develop a good solution without being able to properly assess the results. Without expertise in the field, there is no real usefulness.

- Check the rights. Make sure you’re allowed to use the texts you give your bot. Some books or articles might still be under copyright. Information is gold, especially these days!

- The model can’t do everything. Big models can’t read a whole book at once. You need to help them by picking the right pieces of text to show them.

- Don’t let it make things up. Set limits. It’s better for the bot to say “I don’t know” than to give a wrong or random answer.

- Small things matter for users. Even little details, like showing that the bot is “typing,” help users trust it. Also, make sure it can talk to both beginners and experts in a way they understand.